SQL Server In-Memory OLTP (Online Transaction Processing) is a feature that allows for faster performance of certain types of workloads in SQL Server by storing data and indexes in memory rather than on disk. This can significantly improve the speed of transactions, especially for high-concurrency workloads that involve a lot of insert, update, and delete operations.

In-Memory OLTP introduces two new kinds of objects: Memory-Optimized Tables (MOTs) and Natively Compiled Stored Procedures. Memory-Optimized Tables are designed to be fully resident in memory and use lock- and latch-free data structures to ensure high concurrency and low contention. Natively Compiled Stored Procedures are compiled to native code and are optimized for execution in memory. These stored procedures can access only Memory-Optimized Tables, not traditional disk-based tables (interpreted T-SQL can query both kinds of table), and they can be used to implement complex business logic.

In-Memory OLTP was introduced in SQL Server 2014, where it required the 64-bit Enterprise, Developer, or Evaluation edition. Since SQL Server 2016 SP1 it is available in all editions, including Express and Standard. In-Memory OLTP is not a replacement for traditional disk-based tables; it should be used only for specific workloads that will benefit from it. Memory-optimized tables and indexes are completely new data structures, different from their disk-based counterparts: SQL Server takes no locks or latches on them during reads or writes, which is what gives them the potential to significantly improve online transaction processing performance. The in-memory engine also provides natively compiled stored procedures.

Intro to In-Memory OLTP in SQL Server

In-Memory OLTP is integrated with SQL Server to provide a seamless experience in all areas such as development, deployment, manageability, and supportability. A database can contain in-memory as well as disk-based objects.

Rows in memory-optimized tables are versioned. This means that each row in the table potentially has multiple versions. All row versions are maintained in the same table data structure. Row versioning is used to allow concurrent reads and writes on the same row.

Transact-SQL code can be migrated to natively compiled stored procedures, which are essentially machine code that the CPU can execute directly, without any further compilation or interpretation. Although only a limited subset of T-SQL is supported at the moment, these natively compiled procedures generate far fewer CPU instructions for a given task than the equivalent interpreted T-SQL stored procedure.

All of this gives organizations the opportunity to create high-speed, scalable enterprise and web-scale applications and databases.

So what’s the promise?

In-Memory OLTP in SQL Server is based on four pillars, each of which contributes an important element to the whole.

- With tables in memory rather than on disk, the time to access those tables is considerably reduced, leading to higher performance.

- Because T-SQL is compiled to machine code, natively compiled stored procedures use fewer instructions, so business logic processing is considerably faster.

- Optimistic multi-version concurrency control removes the need for latches and locks. Disk-based tables need them to ensure transactional integrity, but they can cause contention and blocking in highly concurrent workloads. Instead, when rows are modified, new versions are created, and timestamps are used for validation to ensure full ACID support.

- In-Memory OLTP is fully integrated with SQL Server and is managed with a similar set of tools.

The end result of these four pillars is that using In-Memory OLTP, and memory-optimized tables, can be a lot faster than disk-based tables, and yet the two can happily co-exist in the same database and a client application can access data in both without needing to be aware of this fact.
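To make the optimistic concurrency pillar more concrete, here is a toy Python model of a write-write conflict. It is a sketch under simplifying assumptions, not how the engine is implemented: the `Store`, `Transaction`, and `WriteConflict` names are hypothetical, timestamps are a simple counter, and only updates are modelled. The point it illustrates is that no transaction waits on a lock; the second writer to touch a row fails immediately and is expected to retry.

```python
import itertools


class WriteConflict(Exception):
    """Raised when a transaction loses a write-write conflict."""


class Store:
    def __init__(self):
        self.clock = 0        # logical commit timestamp counter
        self.committed = {}   # key -> (value, commit timestamp of latest version)
        self.pending = {}     # key -> id of the transaction holding an uncommitted write
        self._ids = itertools.count(1)

    def begin(self):
        return Transaction(self, next(self._ids), start_ts=self.clock)


class Transaction:
    def __init__(self, store, tx_id, start_ts):
        self.store = store
        self.id = tx_id
        self.start_ts = start_ts
        self.writes = {}

    def update(self, key, value):
        # Another active transaction already wrote a new version: fail, do not wait.
        owner = self.store.pending.get(key)
        if owner is not None and owner != self.id:
            raise WriteConflict(f"{key} is being modified by transaction {owner}")
        # The row was changed and committed after this transaction started.
        _, commit_ts = self.store.committed.get(key, (None, 0))
        if commit_ts > self.start_ts:
            raise WriteConflict(f"{key} changed since transaction {self.id} started")
        self.store.pending[key] = self.id
        self.writes[key] = value

    def commit(self):
        self.store.clock += 1
        for key, value in self.writes.items():
            self.store.committed[key] = (value, self.store.clock)
            del self.store.pending[key]
        return self.store.clock


store = Store()
store.committed["stock:42"] = (10, 0)

t1 = store.begin()
t2 = store.begin()
t1.update("stock:42", 9)          # first writer succeeds
try:
    t2.update("stock:42", 8)      # second writer fails straight away
    t2_outcome = "updated"
except WriteConflict:
    t2_outcome = "conflict"
t1.commit()
final_value = store.committed["stock:42"][0]
```

In this model `t2` would simply start a new transaction and try again, which is also the usual pattern for application code that works with memory-optimized tables.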

What are the benefits?

For in-memory tables, an added benefit is the way SQL Server handles latches and locks. According to Microsoft, the engine uses an optimistic approach: it does not place locks or latches on any version of an updated row, which is very different from disk-based tables. This mechanism reduces contention and lets highly concurrent transactions complete much faster. Instead of locks, In-Memory OLTP uses row versions, keeping the original row version until after the updating transaction has committed. Much like Read Committed Snapshot Isolation (RCSI), this allows other transactions to read the original version while the new row version is being written. The in-memory structure is page-less and optimized for speed in active memory, which can give a significant performance gain, depending on the workload.

SQL Server also changes its logging for these tables, and less is logged than for disk-based tables. Because the before and after versions of a row are kept in memory, SQL Server does not need to write undo information to the log: log records are generated only when a transaction commits, and changes to indexes are not logged at all. In SQL Server 2019, Accelerated Database Recovery (ADR) applies a similar row-versioning idea to logging and recovery.

Where are those pitfalls?

In-memory tables offer increased performance, but some features of disk-based tables are not available, and the list depends heavily on the SQL Server version. In SQL Server 2014, features such as replication, mirroring, and database snapshots are not supported, and the recommended maximum for durable in-memory data is 256 GB per database. SQL Server 2014 also does not accept ALTER TABLE, CREATE INDEX, DROP INDEX, or ALTER INDEX against memory-optimized tables, so a memory-optimized table cannot be altered once created, and FOREIGN KEY and CHECK constraints are not supported; SQL Server 2016 lifted several of these restrictions, as described later. In no version can an existing disk-based table simply be altered to become memory-optimized; the data has to be migrated into a new table. Inside natively compiled stored procedures, many T-SQL operators and constructs, such as OR, LIKE, and OUTER JOIN, are not available in SQL Server 2014, which limits how much business logic developers can move into them. Disk-based tables cannot be accessed from natively compiled stored procedures at all, which reduces the performance gains for workloads that touch both disk-based and in-memory tables.

Features not supported for in-memory tables to keep in mind (some of these restrictions apply only to SQL Server 2014):

- Replication

- Mirroring

- Linked Servers

- Bulk Logging

- DDL Triggers

- Minimal Logging

- Change Data Capture

- Data Compression

- Foreign Keys (not supported in SQL Server 2014; from SQL Server 2016 they can only reference the primary keys of other memory-optimized tables)

- ALTER TABLE (SQL Server 2014 only; supported from SQL Server 2016)

- CREATE INDEX (from SQL Server 2016, indexes can be added with ALTER TABLE instead)

- TRUNCATE TABLE

- DBCC CHECKTABLE

- DBCC CHECKDB

Scenarios Not Supported with In-Memory OLTP SQL Server

- Accessing memory-optimized tables by using the context connection from inside CLR stored procedures.

- Keyset and dynamic cursors on queries accessing memory-optimized tables. These cursors are degraded to static and read-only.

- Using MERGE INTO target, where target is a memory-optimized table, is unsupported. MERGE USING source is supported for memory-optimized tables.

- The ROWVERSION (TIMESTAMP) data type is not supported.

- Auto-close is not supported with databases that have a MEMORY_OPTIMIZED_DATA filegroup.

- Transactional DDL, such as CREATE/ALTER/DROP of In-Memory OLTP objects, is not supported inside user transactions.

- Event notification.

- Policy-based management (PBM): the prevent and log only modes of PBM are not supported. The existence of such policies on the server may prevent In-Memory OLTP DDL from executing successfully. The on demand and on schedule modes are supported.

- Database containment (Contained Databases) is not supported with In-Memory OLTP. Contained database authentication is supported; however, all In-Memory OLTP objects are marked as breaking containment in the dynamic management view (DMV) sys.dm_db_uncontained_entities.

What are the prerequisites to start using memory-optimized tables?

To use Memory-Optimized Tables (MOTs) in SQL Server, certain requirements must be met. SQL Server must be a 64-bit installation running version 2014 or later; SQL Server 2014 and SQL Server 2016 before SP1 additionally require the Developer or Enterprise edition. Azure SQL Database must be running at least version V12. The In-Memory OLTP feature is not a separate component and is available as soon as the SQL Server engine is installed. It is also important to have enough memory available, which can be estimated from the size of the data to be stored in memory. However, because of the memory needed by the row-versioning strategy used for Memory-Optimized Tables and to support table changes, Microsoft recommends having at least double the estimated table size in memory.

The memory provisioning is not that straightforward: remember that if we have other databases or tables in the same server, whether or not they are using the In-memory OLTP feature, we will need to provide memory for those components as well. If the system is sharing its resources, we will need to take that into account.

Recommended Memory = ( Estimated MOT Data Size x 2 ) + Estimated Memory for other components
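As a quick worked example of the formula above, the arithmetic can be written as a small Python helper. The function name and the sample figures (40 GB of table data, 24 GB for everything else on the server) are hypothetical and only there to show how the numbers combine.

```python
def recommended_memory_gb(estimated_mot_data_gb, other_components_gb):
    """Apply the rule of thumb: double the MOT data size, then add the rest."""
    return estimated_mot_data_gb * 2 + other_components_gb


# Hypothetical server: 40 GB of memory-optimized data, 24 GB for other workloads.
example_gb = recommended_memory_gb(40, 24)
print(f"Recommended memory: {example_gb} GB")
```

With these sample inputs the estimate comes to 104 GB, of which 80 GB is reserved for the memory-optimized tables and their row versions.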

Enable Resource Governor in SQL Server to control the memory used by Memory-Optimized Tables (MOTs) and prevent server-wide “low memory” issues. Ensure there is enough free disk space, at least double the estimated size of the MOTs. For best performance, use a dedicated, fast disk for In-Memory OLTP and enable Instant File Initialization (IFI).

Memory-optimized Table – two different approaches

There are two types of memory-optimized tables in SQL Server: durable and non-durable. Both types are fully in-memory, meaning all table rows, whether active or not, are stored in memory while the SQL Server process is running and all read and write operations are performed directly in memory. The main difference between the two types is how the rows are managed and their data lifecycle, which can affect performance. Durable memory-optimized tables behave similarly to traditional disk-based tables, keeping the data even if the server or instance is restarted.

This is achieved by logging and storing operations targeting the table on the disk. This can have a negative impact on performance, but a delayed durability strategy can be used to minimize this impact by saving the data to the disk after the user has received a success message. However, this strategy does not guarantee data safety in the event of a server crash. Non-durable memory-optimized tables, on the other hand, do not store data on the disk and are therefore lost if the server or instance is restarted. By default, memory-optimized tables are created as durable, but there is a recommended limit of 2 TB per database for durable tables starting in SQL Server 2016. This limit is not a hard limit, but rather a recommendation based on Microsoft’s testing.

The Other Type

Durable MOTs are saved to disk and can survive a server crash or restart, while Non-Durable MOTs are volatile and do not need to be logged or saved to disk, which allows for faster performance. However, this also means that data in Non-Durable MOTs will be lost if the server crashes or restarts. Both types of MOTs can be used in databases with traditional disk-based tables, and it is possible to have both types in the same database. In SQL Server 2014, MOTs could not be altered after creation, but this changed in SQL Server 2016, which added support for ALTER TABLE on memory-optimized tables. However, altering a MOT requires double the amount of memory currently being used by the table. SQL Server 2016 also introduced the ability to use foreign keys and nullable index key columns in MOTs.

Memory-optimized Table Variable – What is that?

Taking advantage of Memory-Optimized Table Type (MOT Type), another structure compiled and optimized to work in memory, it is possible to create a Memory-Optimized Table Variable (MOT Variable).

A MOT Variable is similar to a traditional Table Variable. The main difference is that this kind of object uses only memory, not disk, so there is no TempDB access or any other disk I/O.

Another advantage is that MOT Variables use the same structures as MOTs, which are highly optimized to work in memory. The memory allocated to a MOT Variable, including the memory held by invalid rows, is freed as soon as the variable goes out of scope.

Native Compilation

In In-Memory OLTP, “Native Compilation” is the process of converting T-SQL code into native code. This brings more efficient data access and query execution because, unlike with traditional SQL Server objects, the code is no longer interpreted during the execution phase.

Both Memory-Optimized Tables and Memory-Optimized Table Types are compiled, resulting in a DLL file. Those DLLs are recompiled on every database or instance restart, based on the metadata stored in the database.

Row structure and Indexes

Pages and Extents are part of the structure of traditional disk based tables. The rows for this kind of table are identified by page number and offset. This is not true of Memory-Optimized Tables.

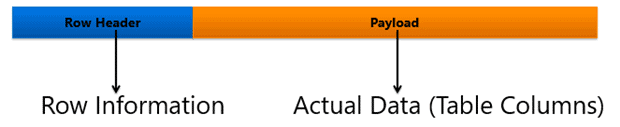

In order to provide a better efficiency, the rows for MOTs are organized differently, as below:

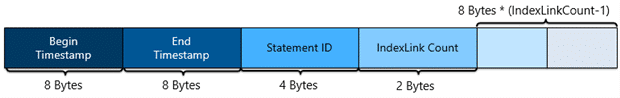

In order to allow concurrent reads and writes, rows in MOTs are versioned. Each row has two main areas, a Header and a Payload, and the Row Header has the following structure:

The Begin and End Timestamps control row visibility. The Begin Timestamp records when the row version was created. The End Timestamp marks the row version as “removed”, although it stays in memory until the Garbage Collector removes it. A row version can be removed by a DELETE or an UPDATE statement (an UPDATE creates a new row version with the modified data), and rows inserted by a transaction that aborts are removed as well. The Statement ID is the unique ID of the statement that created the row. The IndexLink Count is the number of indexes referencing the row, and the remaining slots store the index pointers that link the rows of the table together, which is why every MOT must have at least one index. Rows in MOTs are stored in memory heaps and, unlike rows in disk-based tables, are not kept in close proximity to one another, so they can be spread randomly across memory. This row structure differs from that of disk-based tables in order to optimize memory-based performance.
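The visibility rule described above can be sketched in a few lines of Python. This is a simplified model, not SQL Server's implementation: the `RowVersion` class, the `visible_version` function, and the small integer timestamps are assumptions made for illustration, and garbage collection is ignored.

```python
import math
from dataclasses import dataclass

INFINITY = math.inf  # end timestamp of a version that is still current


@dataclass
class RowVersion:
    begin_ts: float  # commit timestamp of the transaction that created the version
    end_ts: float    # commit timestamp of the transaction that replaced or deleted it
    payload: dict


def visible_version(chain, read_ts):
    """Return the payload valid at read_ts, or None if no version is visible."""
    for version in chain:
        if version.begin_ts <= read_ts < version.end_ts:
            return version.payload
    return None


# One logical row with two versions: inserted at timestamp 10, updated at 20.
chain = [
    RowVersion(begin_ts=10, end_ts=20, payload={"id": 1, "qty": 5}),
    RowVersion(begin_ts=20, end_ts=INFINITY, payload={"id": 1, "qty": 7}),
]

old_reader = visible_version(chain, read_ts=15)  # sees the original version
new_reader = visible_version(chain, read_ts=25)  # sees the updated version
too_early = visible_version(chain, read_ts=5)    # the row did not exist yet
```

A reader that started before the update keeps seeing the old version, which is why readers and writers do not block each other.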

Deeper Look

The Payload area contains the table columns, but what about the row header? Let’s take a deeper look:

- Begin and End Timestamp – In short, these 8-byte fields store, respectively, the timestamp of the transaction that created the row and of the transaction that deleted it. It is not quite that simple, though; there are some important details:

  - The timestamp is the point in time at which the transaction finishes. Until then, both the Begin and End Timestamps temporarily hold the “Global Transaction ID”, a unique incremental value that identifies the transaction. The “Global Transaction ID” counter is reset when the instance is restarted.

  - When a row is created, there is no “End Timestamp” yet, so the field is set to infinity (∞).

  - Not only a DELETE statement removes a row; an UPDATE does too. When a row is changed, a DELETE followed by an INSERT is performed under the hood.

- Statement ID – This is a 4-byte field that stores the unique ID of the statement that generated the row.

- IndexLink Count – As its name suggests, this 2-byte field stores the number of Indexes referencing this row. Every table must have at least one index, which is required in order to link its rows.

- Pointers – The remaining portion of the row header is filled with “Index Pointers”. The number of pointers is the same as the number of indexes referencing the row, which is tracked by the “IndexLink Count” field. In SQL Server 2014, a table could not be modified, and this was also true of its indexes; it wasn’t possible to change, remove or add indexes to a MOT. The reason behind this is that index pointers were part of the row structure. Happily, this limitation was removed in SQL Server 2016.
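The field sizes listed above can be added up to estimate the header overhead per row. The sketch below is a back-of-the-envelope calculation, not an official sizing tool: the 8-byte pointer size and the 2 bytes of padding are assumptions that are not stated in this article, and `row_header_bytes` is a hypothetical helper name.

```python
BEGIN_TS_BYTES = 8
END_TS_BYTES = 8
STATEMENT_ID_BYTES = 4
INDEX_LINK_COUNT_BYTES = 2
PADDING_BYTES = 2        # assumption: alignment padding after IndexLink Count
INDEX_POINTER_BYTES = 8  # assumption: one 64-bit pointer per index


def row_header_bytes(index_count):
    """Estimate the row header size for a table with index_count indexes."""
    if index_count < 1:
        raise ValueError("a memory-optimized table needs at least one index")
    fixed = (BEGIN_TS_BYTES + END_TS_BYTES + STATEMENT_ID_BYTES
             + INDEX_LINK_COUNT_BYTES + PADDING_BYTES)
    return fixed + INDEX_POINTER_BYTES * index_count


one_index = row_header_bytes(1)
three_indexes = row_header_bytes(3)
print(one_index, three_indexes)
```

Under these assumptions every additional index adds 8 bytes to each row version, which is worth keeping in mind when estimating memory for wide-index tables.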

Memory-Optimized File Group

In order to create Memory-Optimized Objects, a Memory-Optimized Filegroup (MOFG) must already have been created in the database.

A MOFG is a kind of Filegroup based on FILESTREAM, and its role is to hold containers in which the durable Memory-Optimized Tables will store their data. Even if you are planning to just use Non-Durable MOTs, the existence of a MOFG is still required.

A MOFG can have several containers where Checkpoint Files will be stored. These files are used to keep track of the data for all durable tables.

Some facts about MOFG:

- Even though they are based on FILESTREAM, there’s no need to enable this in order to create a MOFG.

- A database can have only one MOFG.

- A MOFG may hold multiple containers, each of which is a pointer to an OS folder.

- From SQL Server 2016, each container has its own Offline Checkpoint Thread, allowing better scalability when persisting the data.

- It is good practice, in terms of I/O, to create the containers on different disks.

Let’s talk deployment

One of the challenges with using In-Memory tables in SQL is the complexity of deploying them initially and making subsequent changes. This has become a longer and more complicated task, even with the use of a deployment tool like SQL Compare. When deploying an In-Memory table, for instance, memory-optimized objects cannot be deployed within a transaction. Instead, a two-step process is required where they are deployed without transactions first, before deploying the remaining objects with transactions.

Additionally, memory-optimized tables and natively compiled stored procedures cannot be deployed if DDL triggers and event notifications are being used to monitor certain schema operations, which is common in audited environments. In this case, the DDL triggers and event notifications need to be removed and recreated after the In-Memory OLTP objects are deployed, which affects auditing procedures. Similarly, in SQL Server 2014, if a change to the schema or an index of a memory-optimized table is needed, the table has to be rebuilt using a two-step process, because statements like ALTER TABLE and ALTER INDEX are not supported for memory-optimized tables in that version.

Performance and Scalability

The following factors will affect the performance gains that can be achieved with In-Memory OLTP:

Communication: An application with many calls to short stored procedures may see a smaller performance gain compared to an application with fewer calls and more functionality implemented in each stored procedure.

Transact-SQL Execution: In-Memory OLTP achieves the best performance when using natively compiled stored procedures rather than interpreted stored procedures or query execution. There can be a benefit to accessing memory-optimized tables from such stored procedures.

Range Scan vs Point Lookup: Memory-optimized nonclustered indexes support range scans and ordered scans. For point lookups, memory-optimized hash indexes have better performance than memory-optimized nonclustered indexes. Memory-optimized nonclustered indexes have better performance than disk-based indexes.

- Starting in SQL Server 2016, the query plan for a memory-optimized table can scan the table in parallel. This improves the performance of analytical queries.

- Hash indexes also became scannable in parallel in SQL Server 2016.

- Nonclustered indexes also became scannable in parallel in SQL Server 2016.

- Columnstore indexes have been scannable in parallel since their inception in SQL Server 2014.
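The difference between the two index types can be illustrated with ordinary Python data structures. This is only an analogy: a `dict` stands in for a hash index and a sorted list searched with `bisect` stands in for an ordered nonclustered index, and the sample rows and function names are made up for the example. It says nothing about how SQL Server implements either index internally.

```python
import bisect

# Hypothetical rows: (key, value)
rows = [(17, "meter-17"), (3, "meter-3"), (42, "meter-42"),
        (8, "meter-8"), (25, "meter-25")]

# Hash-style index: direct access by exact key, no useful ordering.
hash_index = {key: value for key, value in rows}

# Ordered index: keys kept sorted so a range can be located and walked.
sorted_keys = sorted(key for key, _ in rows)


def point_lookup(key):
    """Exact-match lookup, the case where a hash index shines."""
    return hash_index.get(key)


def range_scan(low, high):
    """Return rows with low <= key <= high in key order, using the ordered index."""
    start = bisect.bisect_left(sorted_keys, low)
    stop = bisect.bisect_right(sorted_keys, high)
    return [(key, hash_index[key]) for key in sorted_keys[start:stop]]


lookup_result = point_lookup(42)
scan_result = range_scan(5, 30)
```

A hash structure can answer `point_lookup` in one step but has no notion of "keys between 5 and 30", which is why range and ordered scans call for the nonclustered index.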

Index operations: Index operations are not logged, and they exist only in memory.

Concurrency: Applications whose performance is affected by engine-level concurrency issues, such as latch contention or blocking, improve significantly when they move to In-Memory OLTP.

What are the use cases?

Typical instances of businesses that could benefit include those with a high data insert rate such as power companies recording a constant stream of information from smart meters; websites with a large number of users accessing the same data from, say, online magazines; or website gaming platforms where low latency is business-critical.

For those who want, perhaps need, those performance advantages, the journey to In-Memory OLTP is not a straightforward migration. It is a worthwhile one, however, provided the deployment issues are understood first.

SQL Server In-Memory OLTP is a feature that stores data and indexes in memory instead of on disk to improve performance for certain workloads. It includes Memory-Optimized Tables (MOTs) and Natively Compiled Stored Procedures. MOTs use lock-free data structures for high concurrency and low contention. Natively Compiled Stored Procedures are optimized for memory execution and can access only MOTs, not traditional disk-based tables. In-Memory OLTP requires at least SQL Server 2014 and, since SQL Server 2016 SP1, can be used in all SQL Server editions. It should only be used for workloads that will benefit from increased performance.

In-memory OLTP summarised

In-Memory OLTP is fully integrated with SQL Server and is managed with similar tools. It is based on four pillars: faster memory table access, faster business logic processing with natively compiled stored procedures, optimistic multi-version concurrency control, and integration with SQL Server. It can improve the performance of high-concurrency workloads and create high-speed and scalable enterprise and web-scale applications and databases.

There are two types of Memory-Optimized Tables (MOTs): durable and non-durable. Both types are fully in-memory and perform read and write operations directly in memory, but durable MOTs are saved to disk and can survive a server crash or restart, while non-durable MOTs are volatile and do not need to be saved to disk, resulting in faster performance but also data loss in the event of a crash or restart. It is also possible to create Memory-Optimized Table Variables (MOT Variables), which are similar to traditional table variables but use the same structure as MOTs and are optimized for in-memory performance.

In-Memory OLTP also introduces the concept of native compilation, which converts T-SQL code into native code for more efficient data access and query execution. MOTs have a different row structure and use versioning to allow for concurrent reads and writes. They also support hash and range (nonclustered) indexes, and nonclustered indexes can be created on memory-optimized table types as well.