In C#, a Thread is a low-level construct for creating and managing threads, while a Task is a higher-level construct for creating and managing asynchronous operations.

Understanding threads and tasks in asynchronous programming is an essential topic in modern software development. It concerns how concurrent programming constructs, primarily threads and tasks, improve application performance and responsiveness. With the increasing complexity of applications, particularly in environments like .NET, mastering these concepts has become crucial for developers who aim to create efficient, scalable, and user-friendly applications. The significance of this subject is underscored by its widespread application across various programming paradigms and frameworks, making it a cornerstone of effective software design.

At the core of asynchronous programming are threads, which allow multiple tasks to run concurrently within a single process, thereby optimizing CPU utilization. In environments such as C# and Java, different thread types (foreground and background) serve unique purposes in managing application lifecycles. However, direct thread management can be cumbersome and resource-intensive, leading to the adoption of higher-level abstractions like Tasks. Introduced in .NET 4 through the Task Parallel Library (TPL), Tasks simplify concurrent programming by allowing developers to focus on the tasks themselves rather than the complexities of thread management, thus fostering cleaner and more maintainable code structures.

The rise of asynchronous programming techniques is further propelled by the use of keywords such as async and await, which facilitate non-blocking code execution. This paradigm shift significantly enhances application responsiveness, particularly for I/O-bound operations, by allowing other tasks to continue while waiting for time-consuming operations to complete. Asynchronous programming also poses challenges, particularly in transaction management and exception handling within distributed systems, which call for careful design and implementation to maintain data integrity and consistency.

In summary, understanding threads and tasks in asynchronous programming is not only about grasping their technical mechanics but also about appreciating their broader implications in developing high-performance applications. As software architectures evolve, particularly with the emergence of microservices and cloud computing, effective use of these constructs becomes paramount for achieving scalability, improved user experiences, and efficient resource utilization in today’s increasingly complex software ecosystems.

Let’s Look at Basics First!

A Thread represents a single execution thread, and provides methods for starting, stopping, and interacting with the thread. You can use Thread to create new threads, set their priority, and manage their state. When you create a new thread, it begins executing the method you specify.

A Task represents an asynchronous operation that can be started and awaited. A Task can be used to execute a piece of code in the background, and the Task object can be used to track the status and results of the operation. A Task does not create a dedicated thread of its own: when you start one with Task.Run (or with Task.Start and the default scheduler), the work is queued to a thread pool thread. A Task returned by an asynchronous I/O method may not occupy any thread at all while the operation is pending.

In general, you would use Thread when you need fine-grained control over the execution of a piece of code, such as when you need to manage the thread’s priority or set the thread’s state. On the other hand, you would use Task when you need to perform an asynchronous operation, such as when you need to perform an I/O-bound operation, or when you need to perform a piece of computation in the background.

It is also worth mentioning that Task is part of the TPL (Task Parallel Library) and provides more functionality than Thread, such as support for continuation, exception handling, and cancellation.

Thread: Deep Dive

Creating and managing threads at a low level involves working with the operating system’s threading API, which typically includes functions for creating and destroying threads, setting thread priorities, and synchronizing access to shared resources.

Here are some of the low-level details involved in creating and managing threads:

- Thread creation: The operating system provides a function for creating a new thread. This function takes a pointer to a function (often called the “thread function”) that will be executed by the new thread, as well as an optional argument that will be passed to the thread function. The operating system also lets the caller set the thread’s priority and stack size.

- Thread scheduling: The operating system schedules threads to run on a CPU by using a scheduler. The scheduler assigns a time slice to each thread and switches between threads based on their priority and other factors.

- Thread synchronization: When multiple threads are executing concurrently, they may need to access shared resources, such as memory or file handles. To ensure that these resources are accessed safely and consistently, the operating system provides synchronization primitives such as semaphores, mutexes, and critical sections.

- Thread termination: The operating system provides a function for terminating a thread. A thread normally terminates itself by returning from its thread function. Forcibly aborting a thread from outside is not recommended; in .NET, Thread.Abort is obsolete and throws PlatformNotSupportedException on .NET Core and .NET 5 or later.

- Thread communication: The operating system provides a way for threads to communicate with each other. For example, Windows provides events, semaphores and message queues. On the other hand, Linux provides pipes and message queues.

- Thread exception handling: Each thread has its own exception handler and the operating system provides a way to handle exceptions within a thread.
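To make the list above a little more concrete, here is a minimal sketch of creating, configuring, synchronizing, and joining threads from C#. The class and method names (ThreadBasics, RunWorkers, Work) are made up for illustration, and the priority setting is only a hint that some platforms may ignore.

```csharp
using System;
using System.Threading;

class ThreadBasics
{
    private static readonly object Gate = new object();
    private static int _counter;

    // Thread function: increments a shared counter under a lock.
    static void Work(object state)
    {
        int iterations = (int)state;
        for (int i = 0; i < iterations; i++)
        {
            lock (Gate) { _counter++; } // synchronization primitive
        }
    }

    public static int RunWorkers(int workers, int iterations)
    {
        _counter = 0;
        var threads = new Thread[workers];
        for (int i = 0; i < workers; i++)
        {
            threads[i] = new Thread(Work)
            {
                IsBackground = true,                   // does not keep the process alive
                Priority = ThreadPriority.BelowNormal, // scheduling hint
                Name = "worker-" + i
            };
            threads[i].Start(iterations);              // argument passed to Work
        }
        foreach (Thread t in threads) t.Join();        // wait for normal termination
        return _counter;
    }

    static void Main()
    {
        Console.WriteLine(RunWorkers(4, 10000)); // 40000
    }
}
```

Each thread ends by returning from Work, and Join waits for that to happen, so no thread has to be aborted.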

Background

Overview of Concurrent Programming in C#

In the context of C#, concurrent programming is facilitated through various constructs such as Tasks, Threads, and BackgroundWorkers. Each of these approaches has its unique characteristics and use cases, providing developers with tools to efficiently run concurrent code. With the increasing complexity of applications, especially in modern .NET environments, there has been a noticeable shift towards using Tasks due to their simplicity and effectiveness in managing asynchronous operations[1].

Threads in .NET

Threads in .NET are classified into two types: foreground and background threads. Foreground threads keep the application alive until they complete their execution, whereas background threads do not prevent the application from terminating when the main thread finishes[2]. This distinction is crucial for managing application lifecycles effectively, especially in scenarios where the application should be able to exit without waiting for background work, which is stopped when the process ends. For instance, when creating threads in .NET, it is essential to specify whether a thread is a foreground or background thread (through the IsBackground property) to control the application’s termination behavior correctly[2].

BackgroundWorker

The BackgroundWorker component is a higher-level abstraction designed for concurrent execution in C#. It is particularly useful in GUI applications, such as those built with WinForms, where long-running tasks can be offloaded to a background thread to keep the user interface responsive. One of the key advantages of the BackgroundWorker is its built-in support for cancellation, progress reporting, and exception handling, making it a suitable choice for developers needing to manage user interactions alongside background processes[1][3].

Tasks

Tasks are a fundamental concept introduced in .NET 4 as part of the Task Parallel Library (TPL) and represent a high-level abstraction for asynchronous operations. They allow developers to encapsulate a unit of work that can be executed either asynchronously or in parallel with other tasks, focusing on what needs to be done rather than the intricacies of thread management[4][1]. This abstraction significantly simplifies concurrent programming by hiding the low-level details associated with directly managing threads.

Task-Based Approach

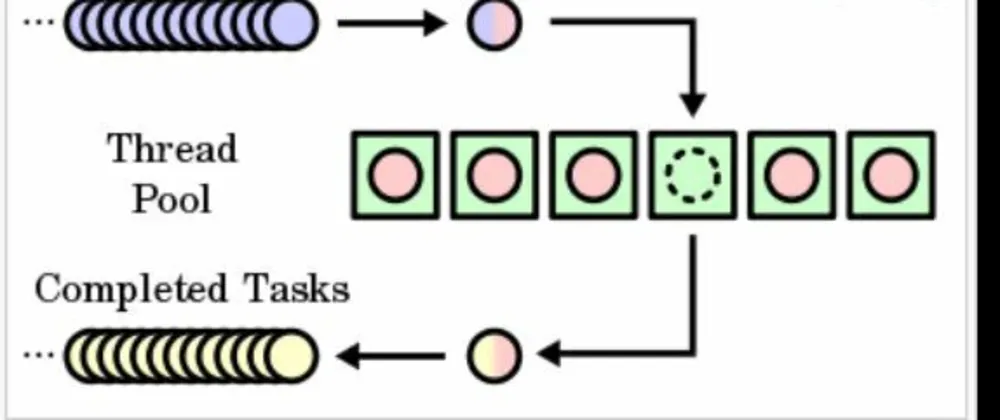

The task-based approach in software engineering dynamically creates tasks that can be managed by a task manager. This strategy allows for a flexible execution model where tasks are picked up and executed by threads in a thread pool, enabling a more efficient and responsive application design[5]. Unlike traditional methods where tasks are executed sequentially, this model promotes a succession of tasks that can trigger the execution of subsequent tasks upon their completion. This design is particularly beneficial in scenarios where the duration of tasks is unpredictable and helps manage loosely coupled operations effectively[5].
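The idea of one task triggering the next upon completion can be sketched in C# with ContinueWith. This is only an illustration; BuildPipeline and the arithmetic inside it are invented for the example.

```csharp
using System;
using System.Threading.Tasks;

class ContinuationDemo
{
    public static Task<string> BuildPipeline(int input)
    {
        // First task is queued to the thread pool.
        Task<int> first = Task.Run(() => input * 2);

        // Each continuation starts only after the previous task completes.
        Task<int> second = first.ContinueWith(t => t.Result + 1);
        return second.ContinueWith(t => "result=" + t.Result);
    }

    static void Main()
    {
        Console.WriteLine(BuildPipeline(20).Result); // result=41
    }
}
```

In newer code the same chain is usually written with await, but ContinueWith shows the underlying "task triggers task" model more directly.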

Characteristics of Tasks

Tasks are characterized by several key features that enhance their utility in concurrent programming:

Independence: Each task represents an independent unit of work, ensuring that two active tasks do not block one another. If conflicts arise, they cannot be executed simultaneously[6].

Dynamic Execution: Tasks can be dynamically assigned and executed by available worker threads, facilitating a more efficient workload distribution[6].

Built-in Support: Tasks provide built-in support for critical features such as cancellation tokens, progress reporting, and exception handling, akin to the BackgroundWorker class[1][7]. This makes tasks easier to work with compared to lower-level threads, which require more explicit management.
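Here is a small sketch of those built-in features working together: a CancellationToken, an IProgress<int> reporter, and an exception surfacing at the await. ListProgress and CountAsync are hypothetical names; a hand-written IProgress<int> is used instead of Progress<T> so that the reports arrive synchronously and in order.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical progress sink that records every reported step.
class ListProgress : IProgress<int>
{
    public List<int> Steps { get; } = new List<int>();
    public void Report(int value) { lock (Steps) Steps.Add(value); }
}

class TaskFeatures
{
    public static async Task<int> CountAsync(int steps, IProgress<int> progress, CancellationToken token)
    {
        int done = 0;
        for (int i = 1; i <= steps; i++)
        {
            token.ThrowIfCancellationRequested(); // cooperative cancellation
            await Task.Delay(10, token);          // stand-in for real work
            done = i;
            progress.Report(i);                   // progress reporting
        }
        return done;
    }

    static async Task Main()
    {
        var progress = new ListProgress();
        Console.WriteLine(await CountAsync(3, progress, CancellationToken.None)); // 3

        using (var cts = new CancellationTokenSource())
        {
            cts.Cancel();
            try
            {
                await CountAsync(3, progress, cts.Token);
            }
            catch (OperationCanceledException) // exception surfaces at the await
            {
                Console.WriteLine("cancelled");
            }
        }
    }
}
```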

Advantages Over Threads

While both tasks and threads are used for concurrent execution, tasks offer several advantages:

Resource Management: Tasks utilize a thread pool managed by the .NET runtime, minimizing overhead and enhancing resource utilization. Threads, on the other hand, are managed by the operating system and can be more resource-intensive to create and manage explicitly[7].

Asynchronous Programming: The async/await keywords integrated with tasks enable developers to write asynchronous code that reads like synchronous code, improving the clarity and maintainability of applications[8].

Simplified Code Structure: The task-based model allows developers to avoid complex thread management logic, leading to cleaner and more readable code[4][1].

Implications of Asynchronous Programming

Asynchronous programming offers significant advantages in modern software development. By enabling tasks to execute independently, it enhances the performance of applications, particularly for I/O-bound operations. This approach allows applications to remain responsive and manage multiple operations concurrently, reducing idle time that can occur during synchronous execution[9][10]. The shift towards asynchronous programming models is reshaping how developers architect applications, leading to a more scalable and efficient software ecosystem.

Threads

Threads are fundamental units of execution that allow multiple tasks to run concurrently within a single process, enhancing the efficiency of program execution and CPU utilization. A thread comprises a program counter, a stack, and a set of registers, and it results from forking a program into multiple running tasks. This structure enables threads to share resources, such as memory, which distinguishes them from separate processes that do not share these resources[11][12].

Asynchronous Programming

Asynchronous programming is a programming paradigm that allows developers to execute tasks concurrently without blocking the main thread of execution. This approach is particularly beneficial for handling I/O-bound operations, such as network requests or file access, where waiting for operations to complete would otherwise lead to idle time in an application. By using constructs such as async and await, developers can write non-blocking code that enhances application responsiveness and performance[13][14].

Key Concepts of Asynchronous Programming

At its core, asynchronous programming enables a program to initiate potentially time-consuming operations and proceed with other tasks without waiting for those operations to finish. This is in contrast to synchronous programming, which executes tasks sequentially, ensuring that each operation waits for the previous one to complete. While synchronous programming can offer simplicity and ease of debugging due to its linear execution flow, it often leads to reduced responsiveness in applications that perform lengthy operations[15][16].

Benefits of Asynchronous Programming

The primary advantages of asynchronous programming include:

Improved Responsiveness: Applications can handle multiple requests simultaneously, which is essential for web applications or services that must respond quickly to user actions[16].

Enhanced Resource Utilization: By allowing tasks to run concurrently, asynchronous programming can lead to better performance, especially in scenarios where I/O operations are common[13].

Scalability: Asynchronous programming models can scale better than synchronous models, allowing applications to manage a higher volume of operations with less resource contention[14].

Practical Implementation

In C#, asynchronous programming is often implemented using the async and await keywords, which simplify the management of asynchronous operations. For example, when fetching data from a database or making a network call, developers can mark methods as async, allowing the caller to perform other operations while waiting for the task to complete[13][14]. Additionally, frameworks such as Entity Framework Core provide rich asynchronous methods that further enhance application performance and responsiveness[16].
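As a rough illustration, the sketch below starts two simulated I/O calls and awaits both. FetchAsync is a hypothetical stand-in for a real database or network call; Task.Delay only simulates latency.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

class AsyncAwaitDemo
{
    // Stand-in for a network or database call; Task.Delay simulates latency.
    public static async Task<string> FetchAsync(string name, int delayMs)
    {
        await Task.Delay(delayMs);
        return name + " done";
    }

    public static async Task<string[]> FetchBothAsync()
    {
        // Both operations are started before either one is awaited,
        // so their waiting periods overlap.
        Task<string> users = FetchAsync("users", 200);
        Task<string> orders = FetchAsync("orders", 200);
        return await Task.WhenAll(users, orders);
    }

    static async Task Main()
    {
        var watch = Stopwatch.StartNew();
        string[] results = await FetchBothAsync();
        Console.WriteLine(string.Join(", ", results)); // users done, orders done
        Console.WriteLine("elapsed ms: " + watch.ElapsedMilliseconds);
    }
}
```

Because the two delays overlap, the elapsed time should be close to one delay rather than the sum of both, although the exact figure depends on the machine.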

Common Use Cases

Asynchronous programming is particularly useful in scenarios that involve waiting for external responses, such as:

Web Applications: Where multiple user requests need to be processed concurrently.

Mobile Apps: To maintain smooth user interfaces while performing background tasks like data synchronization.

APIs and Services: To handle high throughput and provide responsive endpoints for clients[13][9].

Frameworks and Languages

Coroutines

Coroutines are a programming construct that allows developers to write asynchronous code in a sequential manner, closely resembling synchronous programming. This design leads to improved readability and maintainability of code, making it easier for developers to understand and follow the flow of execution[17][18]. Many programming languages, including Python with its asyncio library and Kotlin with kotlinx.coroutines, provide robust frameworks that abstract the complexity of managing coroutines and enable more straightforward implementations of asynchronous tasks[17].

Threads

Threads have long been a foundational concept in concurrent programming, providing a means for parallel execution within applications. Due to their extensive support across various programming languages, threads are accessible and widely used. They allow developers to create multi-threaded applications that can perform multiple tasks simultaneously, thus improving performance and responsiveness[17][19]. However, debugging threaded applications can pose challenges due to potential thread synchronization issues and race conditions, which can be difficult to identify and reproduce[17][18].

Task Parallel Library (TPL)

The Task Parallel Library (TPL) is an essential set of APIs and types in .NET designed to simplify the development of parallel and asynchronous code. It includes fundamental types such as Task and Task<TResult>, which represent asynchronous operations that can be cancelled or awaited[20]. The TPL is particularly beneficial for CPU-bound tasks, enabling developers to run complex algorithms or process large datasets concurrently, thereby enhancing application performance[20].
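For CPU-bound work, the TPL also offers Parallel.For. The sketch below sums squares in parallel using per-thread subtotals; the workload is a toy chosen only so the result is easy to check, and SumOfSquares is an invented name.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

class ParallelSum
{
    public static long SumOfSquares(int n)
    {
        long total = 0;
        Parallel.For(1, n + 1,
            () => 0L,                                     // per-thread subtotal starts at 0
            (i, state, local) => local + (long)i * i,     // accumulate without locking
            local => Interlocked.Add(ref total, local));  // merge each subtotal once
        return total;
    }

    static void Main()
    {
        Console.WriteLine(SumOfSquares(1000)); // 333833500
    }
}
```

Keeping a thread-local subtotal means the shared variable is touched only once per worker, which avoids contention on every iteration.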

Asynchronous Programming Techniques

Asynchronous programming techniques introduce concurrency into applications, allowing tasks to progress independently without requiring simultaneous execution. This is achieved by enabling a task to pause while waiting for other tasks to run, effectively managing application responsiveness, especially in scenarios that involve I/O operations or event-driven programming[18][21]. In environments like JavaScript, while the language operates on a single-threaded model, asynchronous programming can still help manage concurrent tasks using features like promises and async/await constructs[22][23].

Use Cases

Data Processing

For applications involving extensive data processing, such as scientific simulations or financial modeling, leveraging asynchronous programming can significantly enhance performance. By utilizing parallelism, developers can break down large computations into smaller tasks, enabling concurrent processing and reducing overall computation time[24]. This is particularly beneficial in scenarios where operations are I/O-bound, allowing other tasks to proceed while waiting for external resources, such as databases or APIs, to respond.

Microservices Architecture

Asynchronous programming, particularly through the use of threads and tasks, is crucial in microservices architecture. The Saga pattern, for instance, facilitates data consistency across distributed systems by managing transactions that span multiple services. In this context, a saga is a series of transactions, where each step may involve different microservices, and successful execution relies on effective handling of events and compensating transactions when failures occur[25][26]. This model ensures that while individual transactions within a service uphold the ACID principles (Atomicity, Consistency, Isolation, Durability), the cross-service transactions can still maintain overall system integrity[27].

Exception Handling in Distributed Systems

In distributed architectures, exceptions can arise more frequently due to network issues or service failures. The Saga pattern incorporates compensating transactions to mitigate the impact of such exceptions[28]. This approach not only helps in maintaining system stability but also ensures a smoother user experience, as the system can handle failures gracefully and recover from them without requiring manual intervention.
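The compensating-transaction idea can be sketched in a few lines of C#. This is an in-process toy, not a real distributed saga: SagaStep, SagaDemo, and the step names are all hypothetical, and real services would be called over the network with durable state.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// One saga step: an action plus the compensation that undoes it.
class SagaStep
{
    public string Name;
    public Func<Task> Execute;
    public Func<Task> Compensate;
}

class SagaDemo
{
    // Runs steps in order; on failure, compensates completed steps in reverse.
    public static async Task<bool> RunAsync(IEnumerable<SagaStep> steps, List<string> log)
    {
        var completed = new Stack<SagaStep>();
        foreach (SagaStep step in steps)
        {
            try
            {
                await step.Execute();
                log.Add("done:" + step.Name);
                completed.Push(step);
            }
            catch (Exception)
            {
                log.Add("failed:" + step.Name);
                while (completed.Count > 0)
                {
                    SagaStep undo = completed.Pop();
                    await undo.Compensate();
                    log.Add("undone:" + undo.Name);
                }
                return false;
            }
        }
        return true;
    }

    static async Task Main()
    {
        var log = new List<string>();
        var steps = new[]
        {
            new SagaStep { Name = "reserve-stock", Execute = () => Task.Delay(10), Compensate = () => Task.Delay(10) },
            new SagaStep { Name = "charge-card", Execute = () => Task.FromException(new InvalidOperationException("declined")), Compensate = () => Task.CompletedTask },
        };
        bool ok = await RunAsync(steps, log);
        Console.WriteLine(ok + ": " + string.Join(", ", log));
        // False: done:reserve-stock, failed:charge-card, undone:reserve-stock
    }
}
```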

High-Performance Applications

Asynchronous programming is also vital in developing high-performance applications that require responsiveness and scalability. In scenarios where multiple user interactions occur simultaneously, utilizing threads and tasks allows applications to handle numerous requests efficiently, thereby improving throughput and minimizing latency[4]. This is especially important for web applications that must provide quick feedback to user actions, enabling a seamless user experience.

Cloud Computing and Serverless Architectures

In cloud computing environments, especially those employing serverless architectures like Azure Functions, asynchronous programming plays a pivotal role in managing stateful workflows. By simplifying the implementation of state management and reducing the overhead associated with it, developers can focus on the core business logic of their applications. This results in enhanced resiliency and efficient handling of transient failures, making it easier to scale applications dynamically based on demand[29].

Best Practices

In the realm of asynchronous programming, adhering to best practices is crucial for developing efficient, reliable, and maintainable applications. The following strategies provide guidance for optimizing the use of threads and tasks in C#.

Transaction Management in Asynchronous Programming

Transaction management is a critical aspect of asynchronous programming, especially in environments like Spring and Django, where operations may need to occur independently and concurrently. Understanding how to manage transactions effectively while utilizing asynchronous operations is essential for ensuring data integrity and consistency across applications.

Spring Framework

@Transactional Annotation

In the Spring framework, transaction management is primarily handled using the @Transactional annotation, which can be applied at both the class and method levels. This annotation defines the boundaries of a transaction, allowing developers to specify properties such as propagation, isolation, timeout, and readOnly settings[30]. Common propagation behaviors include REQUIRED, REQUIRES_NEW, and NESTED, which determine how transactions relate to one another within a method context[30].

Challenges with Asynchronous Methods

When combining @Transactional with asynchronous methods, developers face unique challenges. Asynchronous operations in Spring are initiated using the @Async annotation, which allows method execution to occur in a background thread pool. This means that when an asynchronous method is invoked from within a @Transactional context, the transaction does not automatically propagate to the new thread executing the asynchronous method[30][16]. Therefore, it’s critical to manage transaction boundaries carefully to prevent issues with data consistency and integrity.

Best Practices

To effectively handle transactions within asynchronous methods, developers should ensure that the method responsible for transaction management is not the same as the one marked with @Async. Instead, the asynchronous method should call another method to establish the transaction context appropriately[30]. This approach helps maintain data consistency while leveraging the benefits of asynchronous execution.

Django Framework

In Django, the transition to asynchronous programming introduces complexities regarding transaction management due to the traditionally synchronous nature of its ORM. The ORM poses limitations when managing transactions in asynchronous contexts, as the transaction context does not carry over into asynchronous execution[31]. Developers must encapsulate transactional logic carefully and may need to consider alternative strategies to achieve atomicity and data consistency in asynchronous Django applications[31].

Strategies for Asynchronous Transactions

To mitigate the challenges of managing transactions in an asynchronous environment, developers can adopt strategies such as starting and committing transactions explicitly within the asynchronous task. This involves using a separate transaction scope for the asynchronous task, ensuring that database operations are performed in the correct context[32]. Understanding transaction propagation behaviors is also crucial, especially when using frameworks that support behaviors like NESTED, which allows for nested transactions within asynchronous processing[32].

Task and ThreadPool: What is the relation?

The ThreadPool is a pool of worker threads that can be used to perform tasks concurrently. It is a part of the .NET Framework and it is designed to minimize the overhead of creating and managing threads.

The Task class works with the ThreadPool by default. When you create a new Task and call the Task.Start() or Task.Run() method, the default task scheduler queues the work to the ThreadPool. The ThreadPool then assigns an available thread from the pool to execute the task.

The Task.Run method is a shorthand for creating a new Task and starting it. It creates a new Task and schedules it to be executed on the ThreadPool. It also returns a Task object that can be used to track the status and results of the operation.

Task class provides a higher-level abstraction for working with threads than the Thread class, and it is a part of the TPL (Task Parallel Library) which is a set of libraries and APIs that allow you to write concurrent and parallel code more easily. The TPL abstracts away the low-level details of creating and managing threads, and provides a simpler and more powerful model for working with concurrent and parallel code.

Under the hood, the Task class schedules the work to be done by the ThreadPool, and the ThreadPool assigns an available thread to execute the task. The Task class also provides additional functionality such as support for continuation, exception handling, and cancellation.
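You can check this relationship yourself with Thread.CurrentThread.IsThreadPoolThread. The following is a small sketch (the PoolCheck class and its method names are made up) that compares a Task.Run delegate with a manually created Thread.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

class PoolCheck
{
    // Work started with Task.Run is executed by a thread pool thread.
    public static bool RunsOnPool()
    {
        return Task.Run(() => Thread.CurrentThread.IsThreadPoolThread).Result;
    }

    // A manually created Thread is a dedicated thread, not a pool thread.
    public static bool DedicatedThreadIsPooled()
    {
        bool pooled = true;
        var thread = new Thread(() => pooled = Thread.CurrentThread.IsThreadPoolThread);
        thread.Start();
        thread.Join();
        return pooled;
    }

    static void Main()
    {
        Console.WriteLine(RunsOnPool());              // True
        Console.WriteLine(DedicatedThreadIsPooled()); // False
    }
}
```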

Let’s look into an example; shall we?

Here’s an example of how you could use a Task to perform an I/O-bound operation asynchronously:

using System;
using System.IO;
using System.Threading.Tasks;

class Program
{
    static void Main()
    {
        // Start a new task to perform the I/O-bound operation
        Task<int> task = ReadFileAsync("file.txt");

        // Perform other operations while the I/O-bound operation is in progress
        Console.WriteLine("Doing other work...");

        // Wait for the task to complete
        int fileLength = task.Result;

        // Print the length of the file
        Console.WriteLine("File length: " + fileLength);
    }

    static async Task<int> ReadFileAsync(string fileName)
    {
        // The final 'true' argument opens the file for asynchronous I/O
        using (FileStream stream = new FileStream(fileName, FileMode.Open, FileAccess.Read, FileShare.Read, 4096, true))
        {
            byte[] buffer = new byte[stream.Length];
            await stream.ReadAsync(buffer, 0, buffer.Length);
            return buffer.Length;
        }
    }
}

But what does this code do?

In this example, the ReadFileAsync method starts an I/O-bound operation asynchronously by reading the contents of a file into a byte array using the FileStream class. The ReadFileAsync method is marked as async, which allows the method to use the await keyword. The await keyword suspends ReadFileAsync until ReadAsync completes, without blocking the calling thread.

In the Main method, we start a new task by calling the ReadFileAsync method and passing it the file name. The ReadFileAsync method returns a Task<int> object, representing the ongoing asynchronous operation. The Main method can then continue to execute while the ReadFileAsync method reads the contents of the file in the background.

The Main method then prints “Doing other work…”, to indicate that it is performing other operations while the I/O-bound operation is in progress.

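Finally, the Main method uses the Result property of the Task<int> object to wait for the task to complete and get the result of the asynchronous operation, which is the length of the file. Note that Result blocks the calling thread until the task finishes. That is acceptable in a simple console program, but in UI or ASP.NET code it is generally better to await the task instead, because blocking there can freeze the application or cause a deadlock.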

It’s worth noting that in this example, we used the FileStream class, but the same concept can be applied to other classes like WebClient, HttpClient that perform I/O bound operations.
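For comparison, here is a sketch of the same idea with an async Main that awaits the task instead of blocking on Result. It is a variation, not the original example: ReadFileLengthAsync is a renamed helper, it reads in a loop because ReadAsync may return fewer bytes than requested, and the temp file exists only so the sample can run on its own.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

class AwaitInsteadOfResult
{
    public static async Task<int> ReadFileLengthAsync(string fileName)
    {
        using (var stream = new FileStream(fileName, FileMode.Open, FileAccess.Read,
                                           FileShare.Read, 4096, useAsync: true))
        {
            var buffer = new byte[4096];
            int total = 0;
            int read;
            // ReadAsync may return fewer bytes than requested, so loop until it returns 0.
            while ((read = await stream.ReadAsync(buffer, 0, buffer.Length)) > 0)
            {
                total += read;
            }
            return total;
        }
    }

    static async Task Main()
    {
        // Temp file created only for this demo.
        string path = Path.GetTempFileName();
        File.WriteAllText(path, "hello");

        Task<int> task = ReadFileLengthAsync(path);
        Console.WriteLine("Doing other work...");

        int length = await task; // no thread is blocked while waiting
        Console.WriteLine("File length: " + length); // File length: 5
        File.Delete(path);
    }
}
```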

Task.Start vs Task.Run

Task.Start() is used to start a task that has been created using the new keyword, and it schedules the task to be executed by the ThreadPool (when the default scheduler is in use). It’s important to note that calling Start() on a task that is already running or has already completed will result in an InvalidOperationException being thrown.

On the other hand, Task.Run() is a shorthand for creating a new task and starting it. It creates a new Task and schedules it to be executed on the ThreadPool, it also returns a Task object that can be used to track the status and results of the operation. This method is a convenient way to create and start a new task in one line of code.
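A short sketch of the difference, with invented method names (ViaStart, ViaRun, SecondStartThrows):

```csharp
using System;
using System.Threading.Tasks;

class StartVsRun
{
    public static int ViaStart()
    {
        var task = new Task<int>(() => 1 + 1); // created, but not scheduled yet
        task.Start();                          // now queued to the default scheduler
        return task.Result;
    }

    public static int ViaRun()
    {
        // Creates and schedules the task in one call.
        return Task.Run(() => 2 + 2).Result;
    }

    public static bool SecondStartThrows()
    {
        Task task = Task.Run(() => { });
        task.Wait();
        try
        {
            task.Start(); // task has already completed
            return false;
        }
        catch (InvalidOperationException)
        {
            return true;
        }
    }

    static void Main()
    {
        Console.WriteLine(ViaStart());          // 2
        Console.WriteLine(ViaRun());            // 4
        Console.WriteLine(SecondStartThrows()); // True
    }
}
```

In everyday code Task.Run is usually the simpler choice; the separate constructor plus Start() is mostly useful when a task has to be created first and scheduled later.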

Await a minute!

The await keyword suspends the enclosing async method until the awaited operation completes, without blocking the current thread. A method marked async returns a Task or Task<T> object, which represents the ongoing asynchronous operation. The await keyword does not create a new thread; instead, it allows the awaiting method (in this case, ReadFileAsync) to yield execution back to its caller (in this case, Main) while the asynchronous operation (in this case, ReadAsync) is in progress.

When the await keyword is used with a method that returns a Task or Task<T>, the compiler generates code that:

- Checks the status of the task; if the task is already completed, it proceeds with the next statement.

- If the task is not completed, the method registers a continuation to be executed when the task completes and returns control to the calling method.

In the ReadFileAsync example, ReadAsync is an asynchronous method that returns a Task representing the ongoing I/O operation. The await keyword tells the compiler to schedule the rest of ReadFileAsync as a continuation that runs after the ReadAsync task completes. By doing so, ReadFileAsync releases the thread to perform other work while the I/O operation is in progress, so other parts of the code can continue executing without waiting for the I/O operation to complete.
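The two steps the compiler generates can be imitated by hand with the awaiter API. This is only a simplified sketch of the idea (the real generated state machine does more, such as capturing context); AwaitManually is a made-up helper.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;
using System.Threading.Tasks;

class AwaitSketch
{
    // Roughly what "int value = await task; onResult(value);" does.
    // Returns true if it continued synchronously, false if it returned early.
    public static bool AwaitManually(Task<int> task, Action<int> onResult)
    {
        TaskAwaiter<int> awaiter = task.GetAwaiter();
        if (awaiter.IsCompleted)
        {
            onResult(awaiter.GetResult()); // already done: just keep going
            return true;
        }
        // Not done: register the continuation and return to the caller.
        awaiter.OnCompleted(() => onResult(awaiter.GetResult()));
        return false;
    }

    static void Main()
    {
        AwaitManually(Task.FromResult(7), v => Console.WriteLine("fast path: " + v));

        var pending = new TaskCompletionSource<int>();
        var done = new ManualResetEventSlim();
        bool sync = AwaitManually(pending.Task, v =>
        {
            Console.WriteLine("continuation: " + v);
            done.Set();
        });
        Console.WriteLine("continued synchronously: " + sync); // False

        pending.SetResult(42); // completing the task triggers the continuation
        done.Wait();
    }
}
```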

For more posts like this, you can also follow this profile: https://dev.to/asifbuetcse